I've been hearing a lot about the use of AI -- large language models, or LLMs, like ChatGPT -- in writing. For example, game writing. Couldn't ChatGPT come up with a lot of barks? And quests? And stories? And backstories?

The most important thing to know about ChatGPT is that when you pronounce it in French, it means, "Cat, I farted." ("Chat, j'ai pété.")

Okay, maybe not the most important thing, but surprisingly relevant.

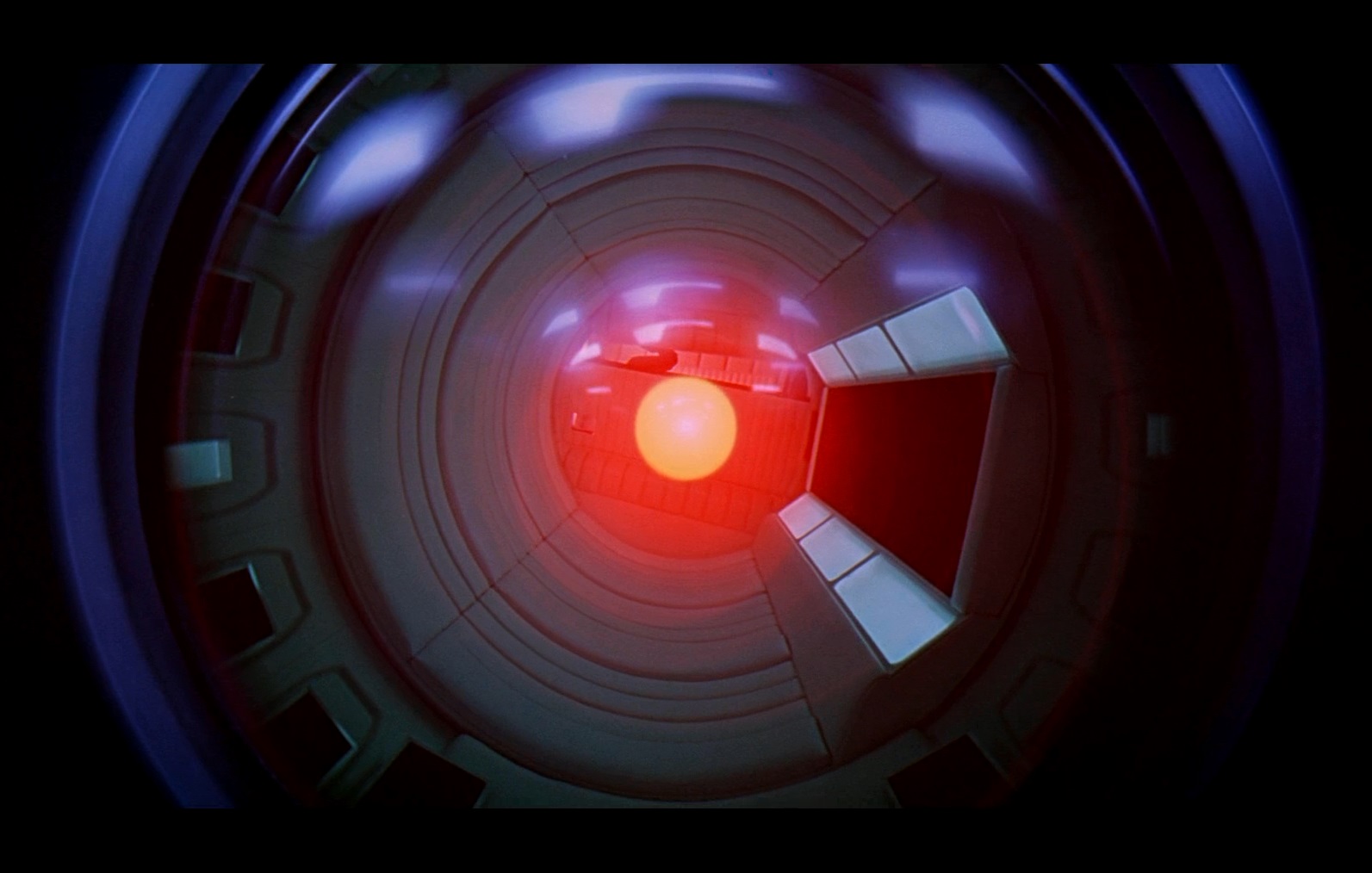

ChatGPT is not "artificial intelligence" in the sense of "the machine is smart and knows stuff." ChatGPT takes a prompt and then, based on patterns it picked up from an enormous pile of writing, figures out what is the most likely answer. Not the smartest or the best answer, just the most likely one.

That means if you ask ChatGPT a question, you are getting the average answer. Don't base your medication on ChatGPT results.
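To make "most likely" concrete, here is a toy sketch in Python. It is not how ChatGPT is built (real LLMs are neural networks trained on vastly more text), and the corpus and function names are made up for illustration. It just counts which word follows which in a handful of sentences, then always picks the most common follower.

```python
from collections import Counter, defaultdict

# Tiny invented corpus, purely for illustration.
CORPUS = [
    "the hero saves the village",
    "the hero saves the princess",
    "the hero saves the village",
    "the hero betrays the village",
]

def build_counts(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

counts = build_counts(CORPUS)
print(most_likely_next(counts, "hero"))  # the majority choice wins
```

Three of the four sentences have the hero saving something, so that is what comes back. The one sentence where the hero betrays the village, which is the interesting one, never gets picked.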

Note that this is *not* how Google answers work. Google's algorithm evaluates the value of a site based on links from other sites -- and rates the links according to the value of those sites. So it is more likely to answer based on what the Encyclopaedia Britannica said than what Joe Rogan said the other day. ChatGPT is more likely to give you Joe Rogan.
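For the curious, the link-rating idea can be sketched in a few lines of Python. This is a simplified version of the publicly described PageRank idea, not Google's actual ranking system, which uses many other signals. The site names, the damping value, and the link graph below are all invented for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to.
    Every linked-to page must also appear as a key in `links`."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page passes its score along, split among its links.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no links spreads its score evenly.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical web: several sites link to the encyclopedia, nobody links to the podcast.
links = {
    "encyclopedia": [],
    "university": ["encyclopedia"],
    "newspaper": ["encyclopedia", "university"],
    "random_blog": ["encyclopedia"],
    "podcast": ["random_blog"],
}

ranks = pagerank(links)
for site, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{site}: {score:.3f}")
```

In this made-up web, the encyclopedia comes out on top because other sites vouch for it. Nothing in the earlier word-counting picture of an LLM does any vouching.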

What does this mean for writing? ChatGPT will give you a rehash of what everyone else has already done.

Two problems with this:

a. It is a hash. A mashup. ChatGPT does not know which bits of story go with which other bits. It has no sense of story logic. It does not know if it is giving you a good answer or a bad answer. It is not trying to give you a good answer. It is giving you an answer based on how much of one kind of thing or another kind of thing shows up in its training data. Its training data is probably The Internet.

So, for example, if you asked it what happens at a typical wedding, it might decide that the groom cheated the night before and the couple broke up -- because people generally do not write on the Internet about happy couples getting hitched without a hitch.

b. It is what everyone else has already done. Good writing comes out of your creativity. Your personality. Your experience of life. It is filtered through who you are. It has your voice. We are not hiring you to give us clams and tired tropes. We are hiring you to come up with something fresh and compelling. Something heartfelt. Something that gets a rise out of you, and therefore might get a rise out of the reader, or the audience, or the player.

If you ask ChatGPT for barks, it will give you the least surprising barks ever. The most average. The most boring.

If you ask it for stories, it will give you stories you've heard before, unless it gives you stories that make no sense, cobbled together from bits and pieces of stories you've heard before.

So, how can you use large language models in your writing?

Simple: it can tell you what to avoid.

Ask it to give you barks. DO NOT USE THE ONES IT SHOWS YOU. Make up other ones.

Ask it to give you plots. DO NOT USE ITS PLOTS. THEY ARE TIRED.

Working with LLMs can teach you to take your writing beyond the expected. They will give you the expected. If you write away from or around what's expected, your writing will become unexpected. Fresh. Original.

Or, you can take its plots and then twist them -- which is what pro writers do all the time. We're not making up plots from scratch every time. 90% of the time what we write is like something else, but twisted or subverted or redoubled in some way. (This appropriation and adaptation of earlier, better writers' work is called "culture.")

TL;DR: you can legitimately use ChatGPT and other large language models for writing. But not directly. ChatGPT can only give you (a) nonsense and (b) cheese. But it is useful as a warning. If ChatGPT came up with something, it's probably too tired for you to use.

PS Another reason not to use LLMs: the content they train on is, generally, whatever they can scrape off the Internet. They can't tell whether the content they train on is human-generated or machine-generated. So, the more LLM content there is out there, the more LLMs are training on LLM output. Initially, you may be getting what a computer thinks a human would say next. But after a while, you are getting what a computer thinks a computer would say next. See the problem?
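Here is a toy Python simulation of that feedback loop. It is only a sketch under loud assumptions: the "model" just counts words and then samples new text in proportion to those counts, which is far cruder than a real LLM, and the corpus, function names, and seed are invented. Still, it shows the direction of travel. A word that fails to show up in one generation can never come back in the next.

```python
import random
from collections import Counter

def next_generation(corpus, rng):
    """'Train' on a corpus by counting words, then 'generate' a new corpus
    of the same size by sampling words in proportion to those counts."""
    counts = Counter(corpus)
    words = list(counts)
    weights = [counts[word] for word in words]
    return rng.choices(words, weights=weights, k=len(corpus))

def diversity_over_generations(corpus, generations, seed=0):
    """Track how many distinct words survive as each generation
    trains on the previous generation's output."""
    rng = random.Random(seed)
    history = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        history.append(len(set(corpus)))
    return history

# Hypothetical "human" corpus: 50 distinct words, each used twice.
human_corpus = [f"word{i}" for i in range(50)] * 2
history = diversity_over_generations(human_corpus, generations=20)
print(history)  # distinct-word count per generation; it can only stay flat or fall
```

Each generation is the computer guessing what the previous computer would say, and the vocabulary only ever shrinks.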